Face recognition technology emulates human performance and can even exceed it. It is increasingly used with cameras for real-time recognition, for example to unlock a smartphone or laptop, log into a social media app, or check in at the airport.

Deep convolutional neural networks, or DCNNs, are a central component of artificial intelligence for identifying visual images, including those of faces. Both the name and the structure are inspired by the organization of the brain’s visual pathways: a multilayered structure in which complexity increases progressively from layer to layer.

The first layers deal with simple features such as the color and edges of an image, and the complexity progressively increases until the last layers perform the recognition of face identity.

With AI, a critical question is whether DCNNs can help explain human behavior and brain mechanisms for complex functions, such as face perception, scene perception, and language.

In a recent study published in the Proceedings of the National Academy of Sciences, a Dartmouth research team, in collaboration with the University of Bologna, investigated whether DCNNs can model face processing in humans. The results show that AI is not a good model for understanding how the brain processes moving faces with changing expressions, because AI is currently designed to recognize static images.

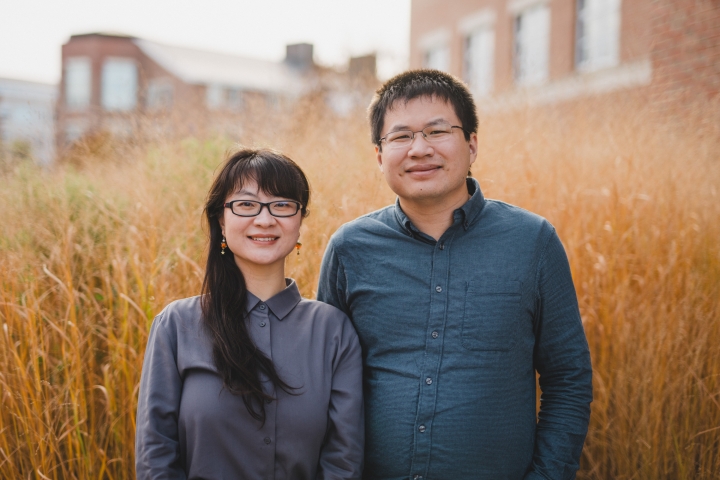

“Scientists are trying to use deep neural networks as a tool to understand the brain, but our findings show that this tool is quite different from the brain, at least for now,” says co-lead author Jiahui Guo, a postdoctoral fellow in the Department of Psychological and Brain Sciences.

Unlike most previous studies, this study tested DCNNs using videos of faces representing diverse ethnicities, ages, and expressions, moving naturally, instead of using static images such as photographs of faces.

To test how similar the mechanisms for face recognition are in DCNNs and humans, the researchers analyzed the videos with state-of-the-art DCNNs and used a functional magnetic resonance imaging (fMRI) scanner to record how participants’ brains processed the same videos. They also studied participants’ behavior with face recognition tasks.

The team found that brain representations of faces were highly similar across the participants, and AI’s artificial neural codes for faces were highly similar across different DCNNs. But the correlations of brain activity with DCNNs were weak. Only a small part of the information encoded in the brain is captured by DCNNs, suggesting that these artificial neural networks, in their current state, provide an inadequate model for how the human brain processes dynamic faces.
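To make the idea of comparing representations concrete, here is a minimal Python sketch of one common approach, representational similarity analysis. This article does not describe the study's actual analysis, so the method, the function names, and the toy numbers below are all illustrative assumptions. The idea is to measure how differently a system responds to each pair of face videos, then check whether two systems agree on which pairs are similar and which are not.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def dissimilarities(patterns):
    """Correlation distance (1 - r) for every pair of stimuli.

    `patterns` holds one response vector per stimulus, for example
    one vector per face video (hypothetical layout).
    """
    return [1 - pearson(a, b) for a, b in combinations(patterns, 2)]


def representational_similarity(patterns_a, patterns_b):
    """Correlate the pairwise-dissimilarity structure of two systems."""
    return pearson(dissimilarities(patterns_a), dissimilarities(patterns_b))


# Made-up response patterns for four stimuli; NOT data from the study.
brain_1 = [[1.0, 2.0, 3.0], [1.1, 2.1, 2.9], [3.0, 1.0, 2.0], [2.0, 3.0, 1.0]]
brain_2 = [[2.0, 4.0, 6.1], [2.2, 4.1, 5.8], [6.0, 2.1, 4.0], [4.0, 6.0, 2.1]]
network = [[0.5, 0.1, 0.9], [0.9, 0.2, 0.1], [0.4, 0.2, 0.8], [0.1, 0.9, 0.3]]

if __name__ == "__main__":
    print("brain vs. brain:  ", representational_similarity(brain_1, brain_2))
    print("brain vs. network:", representational_similarity(brain_1, network))
```

Because the comparison is made on pairwise dissimilarities rather than on raw responses, the two systems do not need the same number of measurement units: a brain region with thousands of voxels can be compared with a network layer of any size, as long as both saw the same stimuli.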

“The unique information encoded in the brain might be related to processing dynamic information and high-level cognitive processes like memory and attention,” explains co-lead author Feilong Ma, a postdoctoral fellow in psychological and brain sciences.

When processing faces, people don’t just determine whether one face is different from another; they also infer other information, such as state of mind and whether the person is friendly or trustworthy. In contrast, current DCNNs are designed only to identify faces.

“When you look at a face, you get a lot of information about that person, including what they may be thinking, how they may be feeling, and what kind of impression they are trying to make,” says co-author James Haxby, a professor in the Department of Psychological and Brain Sciences and former director of the Center for Cognitive Neuroscience. “There are many cognitive processes involved which enable you to obtain information about other people that is critical for social interaction.”

“With AI, once the deep neural network has determined if a face is different from another face, that’s the end of the story,” says co-author Maria Ida Gobbini, an associate professor in the Department of Medical and Surgical Sciences at the University of Bologna. “But for humans, recognizing a person’s identity is just the beginning, as other mental processes are set in motion, which AI does not currently have.”

“If developers want AI networks to reflect how face processing occurs in the human brain more accurately, they need to build algorithms that are based on real-life stimuli like the dynamic faces in videos rather than static images,” says Guo.